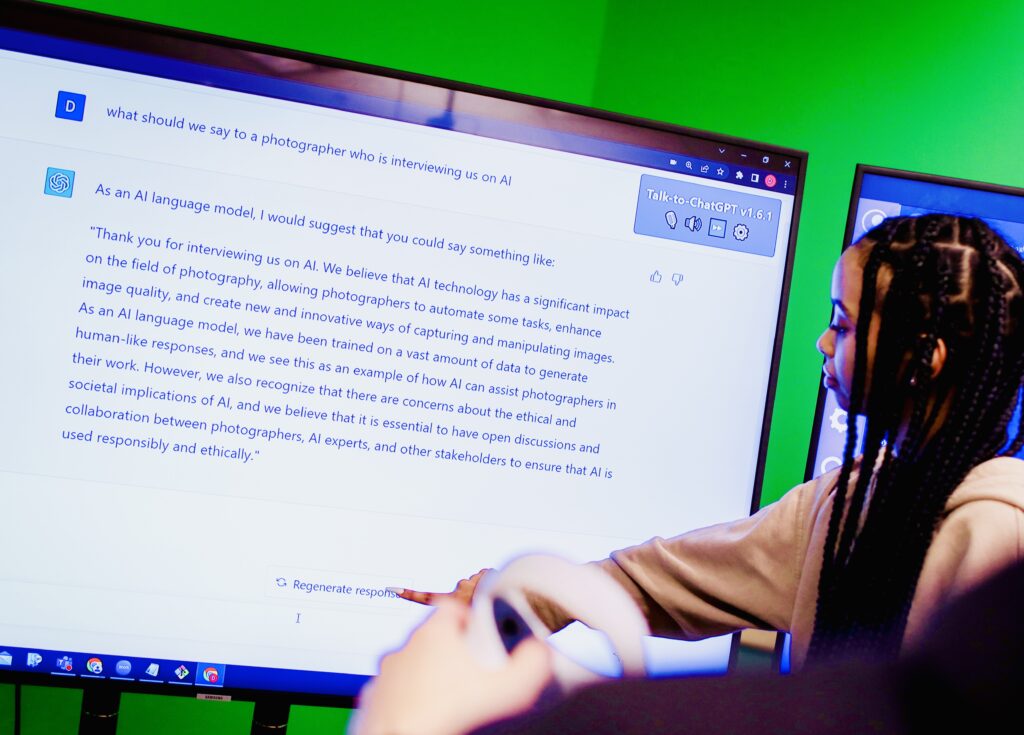

Meet ChatGPT and GitHub Copilot, two new artificial intelligence-powered teaching assistants (created by OpenAI and Microsoft, respectively) that are ready and available to help third-year engineering physics students at UBC who might be looking for help with their homework or assignments.

While students have the option of talking to two human TAs in the third-year course, they sometimes need help with a problem after hours or have a quick question that the AI TA can easily answer. Having an AI TA means students can finish their assignments more quickly without getting stuck on a simple question, which also frees up time for the human instructors to help students with more challenging questions, says instructor Ioan “Miti” Isbasescu. Isbasescu, the head of software systems in the UBC Engineering Physics project lab, said, “software developers… are using AI to augment their abilities so they are now 10 times more productive.”

Using AI to enhance student learning

Rather than fear the technology, some UBC professors like Isbasescu are finding innovative ways to integrate it into their course curriculum to enhance student learning.

In Isbasescu’s course, students use AI and machine learning to learn how to control virtual cars driving around in a virtual environment. Following ChatGPT’s recent release, he says he decided this year to explicitly instruct students to use AI when they generate code for labs and homework assignments. He believes it is important for students to learn how to use the technology now, as they will need it when they enter the workforce. “My course is like a sandbox where students get to play around with AI so they can experience the traps and dangers with these systems, but also learn how to use it in a way that can help them,” says Isbasescu. To reduce the risk of cheating, students who use the AI TA for their homework and lab assignments are required to give a weekly oral update to their instructor and the human TAs about how they used the tool for the assignment.

Challenging bias within AI technology

In collaboration with UBC’s Emerging Media Lab, Dr. Patrick Parra Pennefather teaches an emerging technology course in which some students have applied generative AI to prototype ideas related to emerging technology projects. Dr. Pennefather says he believes it is important that students learn how to use AI in a way that encourages critical thinking. He described an exercise in which students are asked to use an AI image generator to produce an image of a person working at a computer. No matter how many times the AI is prompted, it will more often than not produce an image of a white man, says Dr. Pennefather, referring to the phenomenon in which AI systems can exhibit biases that stem from their programming and data sources. As part of the exercise, Dr. Pennefather says he asks students to critically examine why the machine consistently produces the same image of a white man, rather than a person of colour, woman or non-binary individual.

“There’s no generic AI solution for everybody,” he says. “If you were to give the same assignment to 30 students, they’re all going to use AI differently. Reflecting on how they use it and how it informed their own creative process and documenting their own responses to content an AI generates is a good learning opportunity.

“That, to me, is one reason to not be afraid of it. Adapting your learning design to confront the role of generative AI in the learning process is important for what I teach.”

Visit the UBC News site to learn more.

Through Strategy 16: Public Relevance, UBC is working to align efforts more closely with priority issues in British Columbia and beyond through dialogue and knowledge exchange.